Unless you've been living under a rock for the past few months, you know about ChatGPT and how it's kind of a big deal. Of course it's only a "large language model", and all it really does in the end is (essentially) predict probable words (tokens) given the context and what has been generated so far. But this is a moment... It's eerie how much it can do; it really shows what artificial intelligence can do, and it can actually be really helpful.

While it can do silly things, like giving sensible answers to prompts like "write a song about blogging in the style of Taylor Swift" or "give a proof that there are an infinite number of prime numbers making sure that all sentences rhyme", it is also extremely useful in day-to-day work. It's really like having an intern working for you. In my work, where you might spend a day figuring out how to get some esoteric thing working -- like getting one technology to work with another -- you just ask ChatGPT and it almost always solves it. And it's literally like having an intern in that it will fix its mistakes. You ask for something, it comes back with a pretty good first draft, you see that there's one issue or a case that was overlooked, you point that out, and it comes back with an improved version. It's crazy.

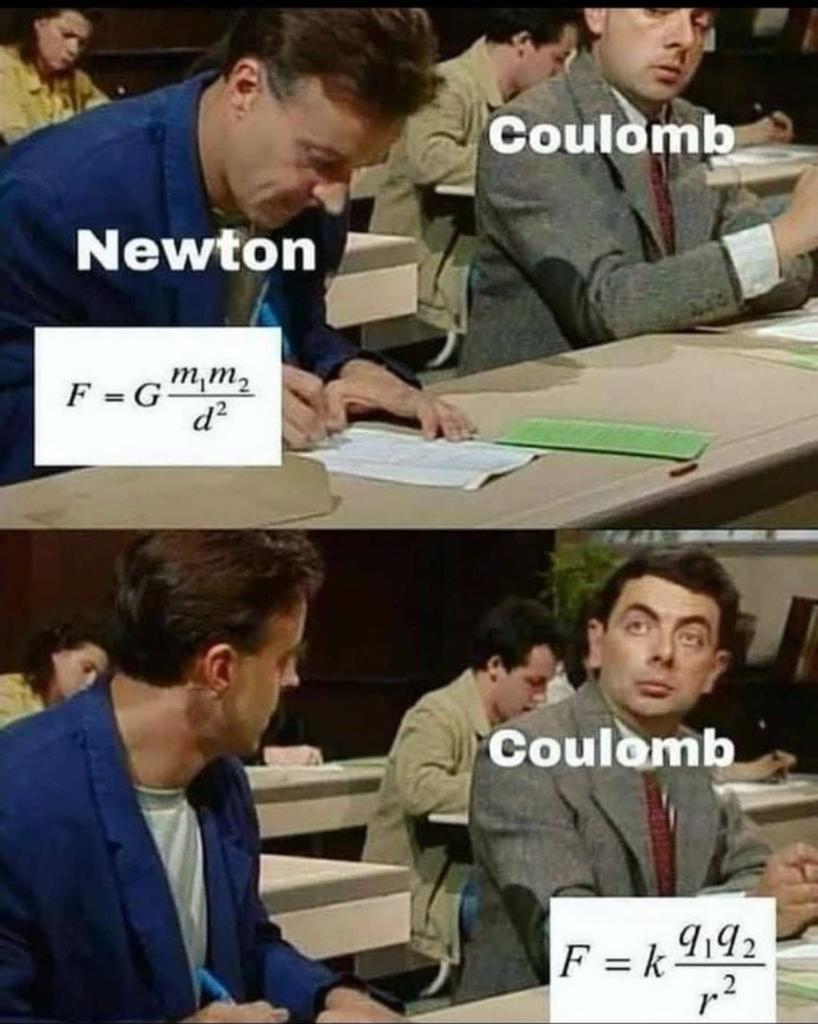

And it seems to somehow understand humour (to a certain extent) as well. It can look at images, understand what's going on, and then explain them. Look at this real example... Here is a picture I shared with a friend:

It is a corny Physics joke. My friend showed the image to GPT, and said "please explain this joke". Here is the output:

The important thing to remember is that all of this is "learned" simply by analyzing billions and billions of images and texts from the internet and finding (in a very structured and complicated way) parameters that help to predict the next word fragment. (By using word fragments as "tokens" instead of full words, the model can even produce new words that it has never seen before.) So it's finding statistical correlations that exist throughout all the writing, etc. that has been produced by millions of different people, over a huge amount of time and in different domains, and somehow it has all been sucked up into a model.
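To make the word-fragment idea concrete, here is a toy sketch in Python. It is not how ChatGPT actually tokenizes text (real GPT tokenizers use a learned byte-pair encoding with a vocabulary of many thousands of entries); the `tokenize` function and the tiny `TOY_VOCAB` are made up purely for illustration. It just shows how a word that was never seen as a whole can still be represented as a sequence of known fragments.

```python
def tokenize(word, vocab):
    """Greedy longest-match split of `word` into fragments found in `vocab`.

    Toy illustration only; real tokenizers learn their fragments from data.
    """
    pieces = []
    i = 0
    while i < len(word):
        # Try the longest possible fragment first, then shrink it.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                pieces.append(word[i:j])
                i = j
                break
        else:
            # No known fragment starts here: fall back to a single character.
            pieces.append(word[i])
            i += 1
    return pieces


# Hypothetical vocabulary of word fragments (an assumption for this example).
TOY_VOCAB = {"un", "blog", "ging", "ify", "able"}

print(tokenize("blogging", TOY_VOCAB))       # ['blog', 'ging']
print(tokenize("unblogifyable", TOY_VOCAB))  # ['un', 'blog', 'ify', 'able']
```

The invented word "unblogifyable" is not in the vocabulary, but every piece of it is, so a model working on fragments could both read it and generate it.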

Now the question is, why does this work? Part of the answer is that the model doesn't always predict the most probable next token. That would not tend to result in very interesting output. Instead, it samples from the probability distribution over tokens that is set up given the context so far. And though the interface to ChatGPT abstracts all this away, one can tell the model how "random" it should be by changing the "temperature" of the probabilities, which exaggerates or flattens the differences between them. With a low temperature, the large probabilities get even larger, so the model is very boring and extremely likely to predict the most probable token next. With a high temperature, it's the opposite: all the probabilities get closer to each other and the output becomes much more random.
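Here is a minimal sketch of temperature sampling in Python, assuming the usual formulation where the model's raw scores ("logits") are divided by the temperature before being turned into probabilities with a softmax. The three tokens and their scores are invented for the example, and the function names are my own; a real model does this over a vocabulary of many thousands of tokens.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature=1.0, rng=random):
    """Draw one token at random according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Made-up candidate tokens and scores (assumptions for illustration).
tokens = ["cat", "dog", "pizza"]
logits = [2.0, 1.0, 0.0]

for t in (0.2, 1.0, 5.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}:", [round(p, 3) for p in probs])

print(sample_token(tokens, logits, temperature=1.0))
```

At a temperature of 0.2 nearly all of the probability lands on "cat", while at 5.0 the three options end up fairly close to each other, which matches the description above.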

As for why it's able to respond in this conversational way, that comes from a second step in training the model, where the parameters are adjusted based on training with "humans in the loop". At first the model is likely very unconstrained, and then you slowly teach it to behave in a certain way. So you ask it to do something, and if the response is close to what you want, you give it positive feedback, and if it's not at all how you want it to respond, you give it negative feedback. Obviously the way you approach this is very specific and technical and I don't know the exact details, but eventually the model settles into a state where it's able to answer as you see above. There are other things that I'm sure they are doing that involve pre-processing the text. So if you asked about something that they don't want it to talk about, like crime or whatever, then they might have another model that predicts that it's something they don't want the main model to even see, so they filter it out and give a canned response back like "I don't know too much about that".

So anyway, what does it actually mean? I think that, like many things, in the end it's a tool. Noam Chomsky recently had a good opinion piece in the NYT: "The False Promise of ChatGPT". Basically, it's not intelligence; but we already knew that, right? I should note here that (obviously, based on what's written above and also based on the wide range of learning material) large language models like ChatGPT make mistakes, and often they make mistakes that no human with actual intelligence would make. Things like inventing stuff that never happened, being confused about timelines, or just inventing concepts or places. Of course this can happen, because it's just drawing tokens from a probability distribution!

I was talking with a friend recently about how, since large language models just look at what's happened before, they completely rely on work that humans have done and essentially regurgitate it in a different way, so they could never come up with something new. But he responded with: isn't that exactly what humans do? And maybe he's right. It's like the series "Everything is a Remix", which explains that all new art/creation is necessarily based on things that happened before. So who knows... maybe we're all doomed?
